Tips For Deploying Advanced Modeling In Commercial Phase Bioprocessing

By Benjamin Bayer, bioprocess modeling expert

Bioprocess models offer enormous potential for advanced real-time monitoring of critical quality attributes, automation and control, and optimizing production efficiency and product quality. By leveraging machine learning algorithms and predictive modeling, teams can analyze large data sets from upstream and downstream processes to identify critical control points and predict system behavior under various conditions, improving process knowledge.

Integrating these robust computational techniques with real-time data analytics enables proactive adjustments and continuous process improvements, which reduces variability, enhances yield and product quality, lowers costs, and helps ensure compliance with regulatory standards.

While these tools, along with soft sensors, are already often being used in development to accelerate process characterization and optimization, their routine use in commercial-phase manufacturing is still eagerly awaited.

The adoption and implementation of these advanced models will facilitate a more agile and resilient manufacturing operation capable of adapting to market demands. Such in-silico tools should also drive innovation and enhance the efficiency, robustness, and scalability of manufacturing processes, while maintaining competitive advantages in the highly regulated biopharmaceutical industry. All these key benefits make advanced modeling and soft sensors crucial components of modern biomanufacturing strategies.

However, effective deployment of advanced models requires interdisciplinary collaboration. There are many challenges on different levels to overcome before advanced modeling in commercial-phase manufacturing becomes a reality. In this article, three intertwining levels are discussed.

Pre-modeling phase

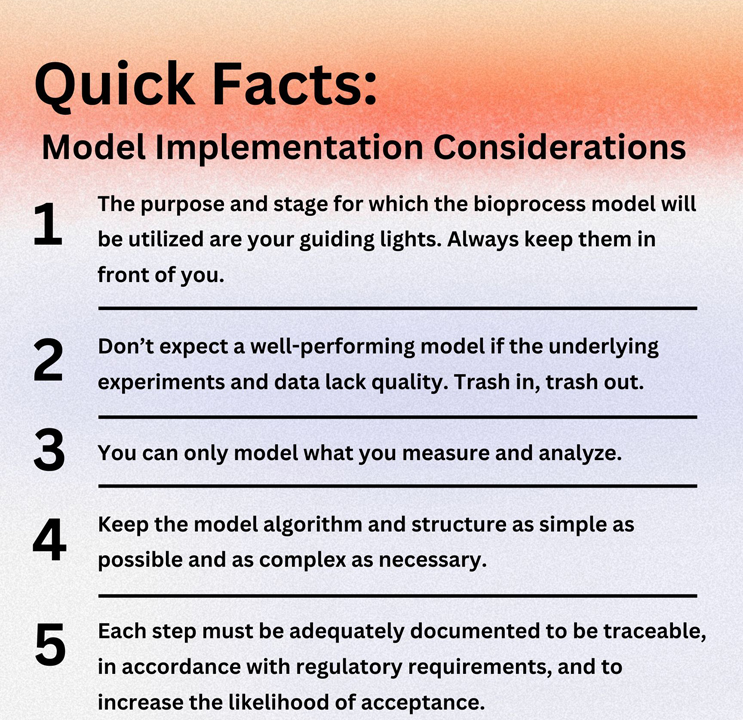

This phase comprises experimental planning and formulation of the scientific question. This early step can make or break a model because it largely determines whether the model will be successful. As the saying goes, “trash in, trash out”: don’t expect quality modeling from poorly characterized wet-lab experiments.

Model development phase

Previously performed, meaningful wet-lab experiments are consolidated for this phase. It deals with the in-silico tasks of finding the right algorithm, delivering the best performance to solve the scientific issue, and generating added value.

Implementation phase

This phase takes place once a robust bioprocess model is validated, solves the scientific issue, and performs well when presented with new data. Implementation is probably the most complex and crucial part of deployment since it is the least straightforward phase, dealing with existing manufacturing systems, regulatory requirements, and authorities.

Pre-modeling Phase

Hurdle 1: Undefined research question

The lack of a research question or an experimental goal is the first critical hurdle. A model is doomed from the start without a clear purpose and well-defined question to answer. Modeling goals can be manifold, such as:

- increasing product titer on harvest day,

- process characterization,

- developing a soft sensor,

- facilitating scalability,

- building an advanced process control (APC) strategy, or

- applying model predictive control (MPC) to optimize control actions and maintain the desired process performance even in the presence of disturbances.

Recommendation:

Spending more initial time defining clear and distinct goals reduces the overall time and workload significantly. While some advanced bioprocess models can fulfill several tasks, it is important to define the research question for which the bioprocess model will be used and consider any additional probes to be utilized in the experiments. Thereby, you avoid performing unnecessary experiments or omitting crucial ones from the start.

Hurdle 2: Suboptimal experiment planning

Even once the research question is defined and the tasks of the bioprocess model are decided, planning the subsequent experiments is key to success. Selecting too many experiments will result in an infeasible wet-lab workload and extend timelines, while too few will significantly decrease model performance by reducing its learning capabilities.

Recommendation:

Performing fewer but smarter, more meaningful experiments reduces the overall number of required experiments for model development and simultaneously increases model performance. This includes investigating the critical quality attributes and defining critical process parameters (CPPs) for the previously defined research question.

For most modeling tasks, design of experiments (DoE) has proven to be the most efficient approach and is the tool of choice. Setting up a design space to investigate CPPs at different levels allows for a structured approach to investigate process behavior and capture the process dynamics required for robust model development. However, selecting too many CPPs or levels might lead to too many experiments. This means a trade-off must be made between knowledge gain and the available experimental capacity.

Micro-scale bioreactor systems have been proven to be a great asset for performing many experiments in parallel. However, the scalability of these systems must be kept in mind.
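The trade-off between the number of CPPs, their levels, and the resulting workload can be made concrete with a small sketch. It assumes a full factorial design, which is only one of several DoE designs and is not prescribed by this article. The factor names and levels are hypothetical and chosen purely for illustration.

```python
from itertools import product

# Hypothetical CPPs and levels, for illustration only.
factors = {
    "temperature_C": [35.0, 36.0, 37.0],
    "dissolved_oxygen_pct": [30.0, 50.0],
    "feed_rate_mL_per_h": [1.0, 2.0, 3.0],
}


def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


design = full_factorial(factors)

# The run count is the product of the level counts: 3 * 2 * 3 = 18.
print(len(design))
for run in design[:3]:
    print(run)
```

Adding a fourth CPP at three levels would triple the count to 54 runs, which is why the number of factors and levels has to be weighed against the available experimental capacity.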

Hurdle 3: Underestimating the importance of frequent sampling

It feels great to successfully finish a study block. On the other hand, realizing afterward that crucial sampling timepoints are missing, or that an analyte of interest was not considered at all, produces quite an opposing emotion.

While the missing timepoints might only significantly decrease the model's explanatory power, a missing analyte could completely shut down model development.

Recommendation:

Ensuring high-quality, comprehensive, and consistent data is critical for developing accurate bioprocess models. Often, historical process data may be incomplete, noisy, or inconsistent, making model development challenging or impossible. A well-structured sampling schedule helps safeguard the integrity and full functionality of the model. Frequent sampling (e.g., at least once per day from inoculation to harvest) and analysis of key analytes (e.g., cell density, viability, glucose, lactate, and product titer), instead of solely focusing on endpoint measurements at harvest, produce bioprocess models that can estimate or predict time-series trends and enable advanced model features.
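A simple completeness check can reveal missing timepoints or analytes before model development starts. The following is a minimal sketch, assuming one sample per day and a data layout of `{day: {analyte: value}}`. The function name, the dictionary keys, and all numeric values are hypothetical.

```python
# Analytes named in the text above; the key names are hypothetical labels.
REQUIRED_ANALYTES = ["cell_density", "viability", "glucose", "lactate", "titer"]


def missing_samples(samples, run_days, analytes=REQUIRED_ANALYTES):
    """Return {analyte: [days without a measurement]} for days 0..run_days."""
    gaps = {}
    for analyte in analytes:
        # Days on which this analyte has an actual (non-None) value.
        measured = {
            day for day, values in samples.items()
            if values.get(analyte) is not None
        }
        missing = [day for day in range(run_days + 1) if day not in measured]
        if missing:
            gaps[analyte] = missing
    return gaps


# Invented example values for a 4-day run (not real process data).
samples = {
    0: {"cell_density": 0.5, "viability": 98.0, "glucose": 6.0, "lactate": 0.1, "titer": 0.0},
    1: {"cell_density": 1.1, "viability": 97.5, "glucose": 5.2, "lactate": 0.6, "titer": 0.1},
    2: {"cell_density": 2.3, "viability": 97.0, "glucose": 4.1, "lactate": None, "titer": 0.3},
    # Day 3 was not sampled at all.
    4: {"cell_density": 6.8, "viability": 95.0, "glucose": 1.9, "lactate": 1.8, "titer": 0.9},
}

gaps = missing_samples(samples, run_days=4)
print(gaps)
```

Here every analyte is flagged for day 3, and lactate is additionally flagged for day 2. Running such a check during the study, rather than afterward, leaves time to recover a missed sample.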

Hurdle 4: No biological replicates

Performing all experiments just once might reduce the workload and cover the designated design space, but it still leaves a lot of room for speculation. Without information from experimental replicates, we are groping in the dark about the reliability of our results and thus the data quality for model development.

Recommendation:

Experimental replicates are required to understand the expected process variability as well as for the expectation management of the model performance.

For example, let's say an experimental condition is repeated three times and the measured final product titers are 5, 7, and 9 g L⁻¹. Since we have performed the same experiment three times but have three different outcomes, how can the bioprocess model know which experiment is correct? They are all correct, but the process variability is rather high, and this variability sets the threshold for a well-performing model.

This example illustrates how a model can never be more accurate than the experimental data it is developed with.

If a final product titer value of 7 g L⁻¹ (±2 g L⁻¹) can be expected, any model estimation or prediction in this interval is valid. Bad data can make a reliable and well-fit model perform poorly. When people don’t investigate variability properly, models will make naive assumptions.
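The replicate example can be checked with a few lines of arithmetic. This sketch assumes the ±2 interval corresponds to the sample standard deviation of the three replicates, which is one plausible reading of the numbers above. The helper `within_variability` is a hypothetical name.

```python
from statistics import mean, stdev

# Final titers of the three replicates from the example, in g/L.
titers = [5.0, 7.0, 9.0]

avg = mean(titers)   # (5 + 7 + 9) / 3 = 7.0
sd = stdev(titers)   # sample standard deviation: sqrt((4 + 0 + 4) / 2) = 2.0


def within_variability(prediction, avg, sd):
    """True if a prediction lies inside avg +/- one standard deviation."""
    return abs(prediction - avg) <= sd


print(avg, sd)
print(within_variability(8.5, avg, sd))  # inside 5..9 g/L
print(within_variability(9.5, avg, sd))  # outside 5..9 g/L
```

A prediction of 8.5 g/L cannot be distinguished from the experimental scatter, whereas 9.5 g/L falls outside it. Judging a model against a tighter tolerance than the replicates support would be asking for more accuracy than the data contain.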

Model Development Phase

Hurdle 5: Selected model structure is not fit for purpose

A model type must be chosen once all required experiments are performed and the model development, validation, and testing start. Often, model estimations or predictions are inaccurate when the model is presented with new data (test data set) even though all experiments went according to plan.

This does not automatically mean there is something inherently wrong with the data or the model. Rather, it suggests the selected model structure does not fit the intended purpose and requires rethinking.

Recommendation:

Spend time choosing a modeling approach (mechanistic, machine learning, or hybrid) that matches the available experimental data. Be sure that it suits the overall purpose, e.g., real-time monitoring, process control, automation, predicting future outcomes, identifying key variables affecting product quality and yield, or simulating entire processes.

Other considerations for choosing a machine learning, mechanistic, or hybrid model include:

- A machine learning model (e.g., techniques like neural networks, random forests, and support vector machines) is probably a good choice if many experiments are available since performance relies heavily on data.

- If only a few experiments were conducted, mechanistic models are the better choice since they use fundamental principles (e.g., to predict cell growth and product formation) and only some parameters are estimated from experimental data. Since these models are typically simplified depictions of the bioprocess, mechanistic predictions are not entirely accurate. One way to increase their accuracy is to adjust the amount of kinetic knowledge incorporated (e.g., adding parameters such as inhibition terms).

- Hybrid models combine mechanistic and machine learning approaches. Leveraging the strengths of both enhances process prediction and control.
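To illustrate the mechanistic option from the list above, here is a minimal sketch of a batch growth model. It assumes Monod kinetics and explicit Euler integration, neither of which is prescribed by this article. All parameter values are hypothetical and would normally be estimated from experimental data.

```python
def simulate_batch(x0, s0, mu_max, k_s, y_xs, hours, dt=0.01):
    """Integrate Monod batch growth with explicit Euler steps.

    dX/dt = mu * X            (biomass, g/L)
    dS/dt = -mu * X / y_xs    (substrate, g/L)
    mu    = mu_max * S / (k_s + S)
    """
    x, s = x0, s0
    for _ in range(int(round(hours / dt))):
        mu = mu_max * s / (k_s + s)
        growth = mu * x * dt
        # Biomass cannot grow on more substrate than is still available.
        growth = min(growth, s * y_xs)
        x += growth
        s = max(s - growth / y_xs, 0.0)
    return x, s


# Hypothetical parameters: 0.1 g/L inoculum, 10 g/L glucose, 48 h batch.
x_end, s_end = simulate_batch(
    x0=0.1, s0=10.0, mu_max=0.05, k_s=0.5, y_xs=0.5, hours=48.0
)
print(round(x_end, 3), round(s_end, 3))
```

Only three parameters (`mu_max`, `k_s`, `y_xs`) have to be fitted, which is why such a model can be developed from few experiments. Adding an inhibition term to `mu` is one way to incorporate more kinetic knowledge.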

Please note, this is only a sampling of suggestions. How to set up those models, hyperparameters, and best practices for model validation and testing are not listed. That would fill many more pages.

Hurdle 6: Wrong input selection for modeling goal

Too many or the wrong input variables will generally impede model performance, even if previous steps were done correctly.

If all available input variables are selected, likely problems include collinearity between inputs, a lack of correlation with the model outputs, and a lack of causality.

Also, selecting unsuitable input variables for the modeling goal will lead to a malfunctioning model.

For example, if the aim is real-time monitoring of hard-to-measure variables using a soft sensor model, easily obtainable data from different probes is sufficient, e.g., spectroscopic or spectrometric data. If you try using that model to predict future outcomes, it will fail since the inputs to the model (real-time values) are only available until the current time point, and other variables must be considered.

Recommendation:

The inputs for training the model are crucial for model performance. Developing a bioprocess model for real-time monitoring is quite straightforward compared to a model used for predictions. While online variables are generally suitable for this task, collinearity and redundant variables, which do not contribute to model performance, must still be investigated and avoided.
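A first screen for collinear inputs can be as simple as a pairwise correlation check. The sketch below uses the Pearson correlation coefficient with an arbitrary threshold of 0.95. The variable names and values are invented for illustration. Pairwise correlation only detects linear relationships between two inputs, so it is a starting point rather than a complete analysis.

```python
from itertools import combinations
from math import sqrt


def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def collinear_pairs(inputs, threshold=0.95):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(inputs.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            flagged.append((name_a, name_b, round(r, 3)))
    return flagged


# Invented online signals (not real process data).
inputs = {
    "stirrer_rpm": [200, 220, 240, 260, 280],
    "power_input_W": [10.0, 11.1, 12.0, 13.1, 14.0],
    "pH": [7.10, 6.95, 7.05, 6.90, 7.00],
}

flagged = collinear_pairs(inputs)
print(flagged)
```

In this invented data set, stirrer speed and power input carry almost the same information, so one of them could be dropped without loss. Which one to keep is a question for bioprocess expertise, not for the correlation coefficient.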

This is also why a more complex model such as a hybrid model is somewhat overkill if only utilized for real-time monitoring. However, if the goal is a bioprocess model that can predict hours, days, or even weeks ahead, support MPC, or simulate processes, then controllable variables, which can be set and manipulated at any time, are required as model inputs.

Some prominent controllable variables that can be used are the cultivation temperature, dissolved oxygen setpoint, and feeding rates. Moreover, such advanced modeling techniques often require significant computational resources, which can be an additional limiting factor.

These quick examples demonstrate why bioprocess expertise and understanding the nature of different process variables are also required to develop an advanced fit-for-purpose bioprocess model, avoiding any pitfalls on the way and ensuring its full functionality. The saying “as simple as possible and as complex as necessary” can also be used as a rule of thumb when selecting an algorithm, the model structure, and the inputs.

Implementation Phase

Hurdle 7: Limitations of existing process control systems

If a bioprocess model is to be applied in real time, such as for monitoring, automation, and control purposes, the existing process control must be robust enough to cope with this additional modeling layer. If the system does not allow for such an integration, it renders the otherwise completely functional bioprocess model powerless and useless.

A mismatch in process control systems at different process stages, sites, or between the sponsor and CMO further complicates this hurdle.

Recommendation:

Advanced models must be integrated seamlessly with existing manufacturing execution systems (MES) and process control systems to function as designed. This integration can be technically complex and requires a significant investment of time and resources.

Understanding current MES capabilities early on is crucial for expectation management. You should know as early as possible whether the MES can integrate the model, or whether you must invest in additional system upgrades.

Hurdle 8: Missing proper documentation

Documentation of all steps is required wherever the bioprocess model is used, from process development all the way to commercial-phase manufacturing.

A clear record could be needed for many reasons, including maintenance, reproducibility, auditability, or transparency for internal procedures or authorities. If these parts are missing and steps are not verifiable, acceptance and implementation will be restricted or dismissed.

Recommendation:

The biopharmaceutical industry is highly regulated, and adopting new tools requires sufficient time and documentation, which represents additional workload. Any changes to the manufacturing process, including the implementation of advanced modeling, must comply with regulatory requirements and often require changes in standard operating procedures and workflows. However, this initial, temporarily increased effort will subsequently and substantially reduce the workload for operators.

Once successfully deployed, bioprocess models need continuous maintenance to ensure they remain accurate and reliable, which is a resource-intensive task and requires a systematic approach to monitoring model performance.
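One possible building block of such a systematic approach is to compare model predictions with offline reference measurements and flag the model for review when the error exceeds a predefined limit. The sketch below uses the root mean square error (RMSE). The limit of 2.0 g/L and all values are hypothetical, and the function names are not taken from any specific system.

```python
from math import sqrt


def rmse(predicted, measured):
    """Root mean square error between predictions and reference values."""
    squared = [(p - m) ** 2 for p, m in zip(predicted, measured)]
    return sqrt(sum(squared) / len(squared))


def needs_review(predicted, measured, limit):
    """True if the prediction error exceeds the accepted limit."""
    return rmse(predicted, measured) > limit


# Hypothetical limit, e.g. derived from known process variability (g/L).
LIMIT = 2.0

# Invented titer values for recent batches (not real process data).
measured = [7.0, 7.0, 7.5]
stable_predictions = [6.8, 7.4, 7.1]
drifted_predictions = [4.0, 10.0, 7.5]

print(needs_review(stable_predictions, measured, LIMIT))
print(needs_review(drifted_predictions, measured, LIMIT))
```

The first set of predictions stays well within the limit, while the second exceeds it and would trigger a review. In a regulated environment, the limit, the review procedure, and any subsequent model update would themselves need to be documented.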

Managing these changes, the extensive documentation, and the validation and approval processes, as well as training staff, is crucial for successful deployment. By now, peer-reviewed research articles and documents with recommendations on how to set up a bioprocess model and its documentation are available, offering guidance through the process.

Conclusion

The deployment of advanced bioprocess models in commercial-phase manufacturing will enable many benefits, such as APC or MPC, that increase process efficiency, reduce variability, and improve quality control. Thus, advanced models reduce batch failures and guarantee more consistent product quality. However, many more hurdles must be overcome before these models are implemented by default. Most of the time, the decisive reason a bioprocess model is not fit for purpose or not implementable is found before the development phase. A bioprocess model will perform poorly if the underlying experiments are poorly designed, analytics are missing, variability is not understood, or the research question was developed carelessly.

This article highlights some of the most prominent hurdles toward this realization. By following the proposed recommendations and adopting a thoughtful strategic approach with a commitment to continuous improvement and innovation, the path toward deploying advanced bioprocess models during process characterization and optimization through the manufacturing stage is simplified.

Important aspects not discussed here are the cultural and organizational barriers that impede the paradigm shift toward using bioprocess models as standard tools. There can be resistance to adopting new technologies, especially in organizations with established practices and a risk-averse culture. Overcoming this resistance and fostering a culture of innovation is as critical for successful implementation and sustainable growth as the hurdles discussed here.

More reading: More detailed information about the most common hurdles limiting meaningful model development can be found in a related peer-reviewed research article by the author: Comparison of mechanistic and hybrid modeling approaches for characterization of a CHO cultivation process: Requirements, pitfalls and solution paths

About The Author:

Benjamin Bayer has more than eight years of scientific experience working in upstream bioprocess modeling, PAT tools, and data science in academia, the startup sector, and industry. He has published numerous peer-reviewed scientific papers on these topics. His work focuses on developing and integrating advanced modeling tools to facilitate bioprocess development, characterization, optimization, and advanced process control strategies. He received his Ph.D. in bioprocess engineering, specializing in hybrid modeling and quality-by-design implementation in upstream processing. His thesis was recognized with the Award of Excellence, the national award for the best dissertations by the Austrian Federal Ministry of Education, Science, and Research.
